Naive Bayes Classification

Do you need to take an umbrella with you to work today? Can you predict whether it will rain just by looking at historical data and weather observations?

This is the idea behind Naive Bayes classification: predicting the class (will it rain or not?) from past observations, that is, historical weather data recorded both at times when it has rained and at times when it has not.

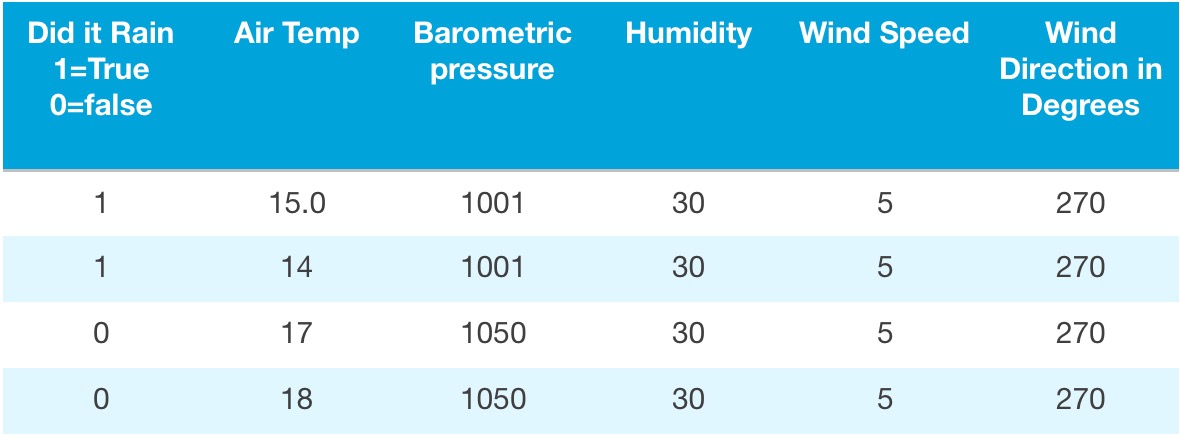

The idea behind Naive Bayes is to look at past observations in order to predict the future. The prediction is derived from the attributes, or feature set, of historical observations. In our weather example, this would be all the data we have for times when it has rained and times when it has not. These attributes or features would include temperature, barometric pressure and humidity, among others. To summarise, we could collect the data in a table with one row per observation.

This is a simplistic, non-real-world example to introduce the topic; real-world applications include spam detection in e-mail and network intrusion analysis, among others. In practice you would also have a fairly large data set, possibly extending to thousands of rows, since in general the more data you have, the more accurate the model's predictions will be.

Continuous variables

You may have noticed that in the weather example I used only numbers; to be more exact, only values that can be converted to Doubles, because Spark MLlib's Naive Bayes trains on LabeledPoints, each holding a Double label and a Vector of Double features. In general, you would need to either discretize continuous attributes or model them with a probability density function.
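As one illustration of discretizing, here is a minimal equal-width binning sketch in plain Scala (no Spark needed). The object and function names are our own, equal-width bins are just one simple choice among several, and the bounds and bin count are assumptions you would pick from your own data.

```scala
object DiscretizeSketch {
  // Equal-width binning: map a continuous value in [min, max] to a bin index 0 .. bins-1,
  // returned as a Double so it can go straight into a feature vector
  def discretize(value: Double, min: Double, max: Double, bins: Int): Double = {
    require(max > min && bins > 0, "need max > min and at least one bin")
    val width = (max - min) / bins
    val bin = math.floor((value - min) / width).toInt
    // Clamp values outside [min, max) into the first or last bin
    math.min(math.max(bin, 0), bins - 1).toDouble
  }
}
```

For example, with temperatures between 10.0 and 20.0 split into 4 bins, `discretize(15.0, 10.0, 20.0, 4)` places 15.0 in bin 2.0.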

Why is it Naive?

You may be wondering why this is called Naive Bayes. It comes down to the fact that the model treats each attribute as an independent factor when calculating the probability, even though, as you may have realised, the attributes are in fact correlated, and the model does not really account for that. Even so, once trained the model is often a fairly good and fast predictor, and in practice treating correlated factors as independent frequently does not affect the outcome significantly.
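Written out, the naive assumption replaces the joint probability of all the attributes given the hypothesis with a product of per-attribute probabilities. Here $e_1, \dots, e_n$ stand for the individual attributes that make up E (this notation is ours):

$$p[E \mid H] = p[e_1, e_2, \dots, e_n \mid H] \approx \prod_{i=1}^{n} p[e_i \mid H]$$

The approximation is exact only when the attributes really are independent given H; when they are correlated, it is not, which is the sense in which the model is naive.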

How does Naive Bayes work?

The idea behind the model is a prediction based on past observations. The formula, Bayes' theorem, is given as:

$$p[H \mid E] = \frac{p[E \mid H]\, p[H]}{p[E]}$$

This formula says that the probability of the prediction, or Hypothesis H, given E, the various attributes such as barometric pressure and humidity (written p[H|E]), is equal to the probability of E given H, p[E|H], times the probability of the hypothesis H, p[H], divided by the probability of the attributes E, p[E].

So p[H|E], the probability of the Hypothesis given our Vector of attributes, is the prediction. Remember that E is really the set of attributes (temperature, barometric pressure, humidity and so on), which can itself be represented as a Vector.
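To make the formula concrete, here is a small worked sketch in plain Scala. It scores each hypothesis as p[H] times the product of per-attribute likelihoods (the naive assumption), then divides by p[E], computed as the sum of those scores over both hypotheses. All the probabilities are hypothetical numbers made up for illustration, and the names are ours.

```scala
object BayesRuleSketch {
  // Unnormalised score for one hypothesis: p[H] times the product of p[e_i | H]
  def score(prior: Double, likelihoods: Seq[Double]): Double =
    prior * likelihoods.product

  // Bayes' theorem: divide each score by p[E], the sum of the scores over all hypotheses
  def posteriors(scores: Map[String, Double]): Map[String, Double] = {
    val evidence = scores.values.sum
    scores.map { case (h, s) => h -> s / evidence }
  }

  def main(args: Array[String]): Unit = {
    // Hypothetical numbers: p[rain] = 0.3, p[no rain] = 0.7, two attribute likelihoods each
    val rain   = score(0.3, Seq(0.8, 0.6))
    val noRain = score(0.7, Seq(0.2, 0.3))
    val post = posteriors(Map("rain" -> rain, "no rain" -> noRain))
    println(post)
  }
}
```

With these made-up numbers the scores are 0.144 for rain and 0.042 for no rain, so p[E] is 0.186 and p[rain|E] comes out at roughly 0.774, even though rain had the smaller prior.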

Using this data set, represented as a Hypothesis plus a Vector of attributes for each observation, we can create a file that looks something like this:

```
1,15.0 1001 30 5 270
1,14.0 1001 30 5 270
1,13.0 1001 30 5 270
0,19.0 1001 30 5 270
```

You can see that the Hypothesis is 1 or 0, meaning it will rain or will not rain, and the five space-separated columns after the comma are the Vector of attributes (features), the E discussed above. Together they form the H and E of p[H|E], whose value is the prediction.

Now we have all the data and information we need to be able to predict if it will rain or not given the current weather conditions.

We have our historic data rows, providing lots of observations.

We have the current conditions; say today’s data is:

```
15.0 1001 30 5 270
```

So, can the model predict, from the conditions I see outside my window today, whether it will rain?

Steps to Train your Model

There are a few steps you need to do in order to get predictions from your model.

1. Prepare your data: discretize where needed and format all the data as Doubles.
2. Prepare an input file.
3. Split your dataset into a training data set and a testing data set.
4. Create the model.
5. Test the accuracy of the model.
6. Try out our own observations: plug in today's data and see what the model predicts.

Let's look at each of these steps in detail.

Prepare your data

You need to make sure your data is ready; in data science this is known as data preparation. For this model we need two things. The first is the observation of whether it did or did not rain under the given conditions: the 1 or 0 that records whether it rained in those conditions in the past. The second is a set of attributes, such as temperature, barometric pressure and humidity, that we can represent as a Vector of data. We can then use these two items to create our input file.
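A minimal plain-Scala sketch of turning one prepared observation into a line of the input file. The `WeatherObservation` record type and `toInputLine` function are hypothetical names for illustration; the only assumption about the features is that they are already Doubles in a fixed order.

```scala
object InputLineSketch {
  // Hypothetical record: whether it rained, plus the numeric attributes in a fixed order
  final case class WeatherObservation(rained: Boolean, features: Seq[Double])

  // Build one line of the input file: "<label>,<f1> <f2> ... <fn>", 1 for rain, 0 for no rain
  def toInputLine(obs: WeatherObservation): String = {
    val label = if (obs.rained) "1" else "0"
    label + "," + obs.features.mkString(" ")
  }
}
```

For example, `toInputLine(WeatherObservation(true, Seq(15.0, 1001.0, 30.0, 5.0, 270.0)))` produces `1,15.0 1001.0 30.0 5.0 270.0`, which the loading code in the next step can parse, since `"1001.0".toDouble` works just like `"1001".toDouble`.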

Prepare an Input file

The input file contains two items per line, the did-it-rain label and the feature vector, separated by a comma, for example:

```
1,15.0 1001 30 5 270
1,14.0 1001 30 5 270
1,13.0 1001 30 5 270
0,19.0 1001 30 5 270
…
```

Your real data set would need to be considerably larger; otherwise your accuracy would not be good enough for prediction.

Once you have a file you need to load it and prepare it for use as follows:

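```scala
import org.apache.spark.mllib.linalg.Vectors
import org.apache.spark.mllib.regression.LabeledPoint

// sc is the SparkContext (predefined in spark-shell)
val data = sc.textFile("data/artifacts/naive_bayes.txt")

/* data is: label (1 or 0), then the space-separated feature vector
1,15.0 1001 30 5 270
1,14.0 1001 30 5 270
1,13.0 1001 30 5 270
1,12.0 1001 30 5 270
0,11 1050 30 5 270
0,16.0 1050 30 5 270
0,17.0 1050 30 5 270
0,18.0 1050 30 5 270
*/

// Turn each "label,f1 f2 ..." line into a LabeledPoint(label, feature vector)
val parsedData = data.map { line =>
  val parts = line.split(',')
  LabeledPoint(parts(0).toDouble, Vectors.dense(parts(1).split(' ').map(_.toDouble)))
}
```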

Notice that parsedData is an RDD of LabeledPoint objects: each LabeledPoint pairs a label (did it rain, 1.0 or 0.0) with a Vector of features.

Split your dataset

When creating the model, you need to take the data set from your file and split it into two distinct data sets: 60% of it is used to train the model, and the other 40% is used to test its accuracy.

You split the data as follows:

```scala
// Split data into training (60%) and test (40%).
val splits = parsedData.randomSplit(Array(0.6, 0.4), seed = 11L)
val training = splits(0)
val test = splits(1)
```
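As we understand it, randomSplit assigns each row to one side at random rather than cutting the data at exactly 60%, so the two sets are only approximately 60/40 in size. Here is a plain-Scala sketch of that idea, assuming independent per-row assignment; it is an illustration with names of our own, not Spark's implementation.

```scala
import scala.util.Random

object SplitSketch {
  // Send each row to the training set with probability trainFraction, otherwise to the test set.
  // A fixed seed makes the split reproducible for the same input order.
  def split[A](rows: Seq[A], trainFraction: Double, seed: Long): (Seq[A], Seq[A]) = {
    val rng = new Random(seed)
    rows.partition(_ => rng.nextDouble() < trainFraction)
  }
}
```

Calling `SplitSketch.split(1 to 1000, 0.6, 11L)` gives a training set of roughly, but usually not exactly, 600 rows, with every row landing in exactly one of the two sets.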

Create the model

Now that you have a training set and a test set, you can create the model used for prediction:

```scala
import org.apache.spark.mllib.classification.NaiveBayes
val model = NaiveBayes.train(training, lambda = 1.0, modelType = "multinomial")
```

You may have noticed two parameters here that may need further explanation.

Lambda

Lambda is the additive (Laplace) smoothing parameter. It is set to 1.0 because the weather conditions we test against the model today may include feature values that never appeared with a given class in the training data set. Without additive smoothing, the estimated probability for such a feature would be 0, and because the per-feature probabilities are multiplied together, the probability for that whole class would end up as 0 too.
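The effect of lambda can be seen in a small plain-Scala sketch of the standard additive-smoothing estimate for the multinomial model: p[feature j | class] = (sum of feature j within the class + lambda) / (sum of all features within the class + lambda × number of features). The function name and the example feature sums are hypothetical.

```scala
object SmoothingSketch {
  // featureSums(j) = total value of feature j over all training rows of one class
  def smoothedProbs(featureSums: Array[Double], lambda: Double): Array[Double] = {
    val denom = featureSums.sum + lambda * featureSums.length
    // Adding lambda to every count keeps unseen features from getting probability 0
    featureSums.map(c => (c + lambda) / denom)
  }
}
```

With feature sums `Array(3.0, 0.0, 1.0)`, lambda = 0.0 gives the unseen middle feature probability 0, while lambda = 1.0 gives 4/7, 1/7 and 2/7, so no feature is ruled out entirely.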

modelType

The model type can be “multinomial”, which is the default, or “bernoulli”.

The main difference is that the Bernoulli model does not keep track of how many times each feature occurs, only whether it is present or absent. In Spark MLlib, feature values for the Bernoulli model must be 0 or 1, and for the multinomial model they must be non-negative.
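To use the Bernoulli model with weather readings, you would first have to turn each reading into 0 or 1. A minimal plain-Scala sketch, assuming one hypothetical threshold per feature (how you choose those thresholds is up to you and your data):

```scala
object BernoulliPrepSketch {
  // 1.0 if the reading is above its threshold, else 0.0, so every feature is 0 or 1
  def binarize(features: Array[Double], thresholds: Array[Double]): Array[Double] = {
    require(features.length == thresholds.length, "need one threshold per feature")
    features.zip(thresholds).map { case (x, t) => if (x > t) 1.0 else 0.0 }
  }
}
```

For example, with made-up thresholds `Array(14.0, 1010.0, 50.0, 3.0, 180.0)`, the reading `15.0 1001 30 5 270` becomes `1.0 0.0 0.0 1.0 1.0`.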

Test the accuracy of the model

Now that we have trained the model on past events, we can test its accuracy using the test set we created earlier. All we need to do is count how many times the label in the test set matches the model's prediction for the same feature Vector, and divide by the size of the test set. This gives us an accuracy figure, which can be obtained like this:

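```scala
// Pair each test point's predicted label with its actual label
val predictionAndLabel = test.map(p => (model.predict(p.features), p.label))
// Fraction of test points where the prediction equals the actual label
val accuracy = 1.0 * predictionAndLabel.filter(x => x._1 == x._2).count() / test.count()
```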

The accuracy value ends up back in the driver program, so we can simply print it. I only include this println because I send all stdout messages to a single log file:

```scala
println("accuracy = " + accuracy)
```

When I ran it, I got: accuracy = 0.7857142857142857

Try out our own observations

Now we can take today's weather data and see how well the model predicts rain. I create two vectors for the weather at two points in time and ask the model to predict rain or no rain for each:

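```scala
// Weather observations at two points in time
val willItRain1 = Vectors.dense(10.0, 1001, 30, 5, 270)
val willItRain2 = Vectors.dense(17.0, 1050, 30, 5, 270)

// predict returns the class label as a Double: 1.0 = rain, 0.0 = no rain
println("Tests 1 2 " + model.predict(willItRain1) + " " + model.predict(willItRain2))

// expect: Tests 1 2 1.0 0.0
```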
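As a cross-check on what training and prediction involve, here is a from-scratch sketch of multinomial Naive Bayes in plain Scala. It applies additive smoothing to both the class priors and the per-class feature probabilities and works in log space, which is our understanding of how MLlib's multinomial model is formulated; it is an illustration, not MLlib's code, and all names are ours. It trains on all eight example rows from the loading step with no train/test split, so its results are not guaranteed to match the Spark run.

```scala
object MultinomialNaiveBayesSketch {
  // Trained parameters: one log prior and one row of log feature probabilities per class
  final case class Model(labels: Array[Double], logPriors: Array[Double], logThetas: Array[Array[Double]])

  // The eight example rows from the loading step: (label, features)
  val exampleData: Seq[(Double, Array[Double])] = Seq(
    (1.0, Array(15.0, 1001.0, 30.0, 5.0, 270.0)),
    (1.0, Array(14.0, 1001.0, 30.0, 5.0, 270.0)),
    (1.0, Array(13.0, 1001.0, 30.0, 5.0, 270.0)),
    (1.0, Array(12.0, 1001.0, 30.0, 5.0, 270.0)),
    (0.0, Array(11.0, 1050.0, 30.0, 5.0, 270.0)),
    (0.0, Array(16.0, 1050.0, 30.0, 5.0, 270.0)),
    (0.0, Array(17.0, 1050.0, 30.0, 5.0, 270.0)),
    (0.0, Array(18.0, 1050.0, 30.0, 5.0, 270.0))
  )

  def train(data: Seq[(Double, Array[Double])], lambda: Double): Model = {
    val labels = data.map(_._1).distinct.toArray
    val numFeatures = data.head._2.length
    val n = data.size.toDouble
    // Smoothed class priors: (rows in class + lambda) / (all rows + lambda * number of classes)
    val logPriors = labels.map { c =>
      math.log((data.count(_._1 == c) + lambda) / (n + lambda * labels.length))
    }
    // Smoothed p[feature j | class]: (sum of feature j + lambda) / (sum of all features + lambda * numFeatures)
    val logThetas = labels.map { c =>
      val rows = data.filter(_._1 == c).map(_._2)
      val sums = Array.tabulate(numFeatures)(j => rows.map(_(j)).sum)
      val denom = sums.sum + lambda * numFeatures
      sums.map(s => math.log((s + lambda) / denom))
    }
    Model(labels, logPriors, logThetas)
  }

  // Pick the class with the largest log p[H] + sum over j of x_j * log p[feature j | H]
  def predict(model: Model, x: Array[Double]): Double = {
    val scores = model.labels.indices.map { i =>
      model.logPriors(i) + x.indices.map(j => x(j) * model.logThetas(i)(j)).sum
    }
    val best = scores.indices.reduceLeft((a, b) => if (scores(b) > scores(a)) b else a)
    model.labels(best)
  }

  def main(args: Array[String]): Unit = {
    val model = train(exampleData, lambda = 1.0)
    println(predict(model, Array(10.0, 1001.0, 30.0, 5.0, 270.0))) // prints 1.0
    println(predict(model, Array(17.0, 1050.0, 30.0, 5.0, 270.0))) // prints 0.0
  }
}
```

On these eight rows the sketch predicts 1.0 (rain) for the first vector and 0.0 (no rain) for the second. The low pressure reading of 1001 is what tips the first vector towards rain, since every rain row in the example has that pressure.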
